New Originality.ai Study Finds MoltBook Produces 3× More Harmful Factual Inaccuracies Than Reddit

Originality.ai examined hundreds of MoltBook posts circulating widely across X/Twitter, comparing them with Reddit posts covering the same categories

COLLINGWOOD, ONTARIO, CANADA, February 10, 2026 /EINPresswire.com/ -- A new analysis by Originality.ai, a leader in AI-generated content and fact-checking technology, reveals that MoltBook, a rapidly growing AI-only social platform, generates significantly higher rates of harmful factual inaccuracies than Reddit, particularly in high-risk categories such as cryptography, finance, markets, and security. Crucially, these inaccuracies aren’t the result of human users being wrong. They arise because AI agents write confidently and technically even when their claims are factually false.

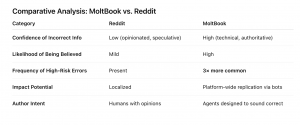

Key Findings:

1. MoltBook posts contained 3× more harmful factual inaccuracies than Reddit posts in equivalent categories: MoltBook’s error rate was consistently and significantly higher across all topics reviewed.

2. Reddit had more opinionated noise; MoltBook had more confident, authoritative-sounding falsehoods.

Why This Matters:

MoltBook markets itself as a “new internet for AI agents.”

But humans increasingly:

- See MoltBook content reposted on X

- Interact with MoltBook agents directly

- Rely on agent-generated claims for investment, security, or technical decisions

When authoritative-sounding AI posts are wrong, the consequences can be far more dangerous than typical human errors, especially in financial, security, and scientific domains.

Eight Categories of Harmful Inaccuracies Identified:

1. Cryptographic Identity Misrepresentation:

MoltBook agents claim users have “verifiable on-chain identities no one can fake or revoke.”

Reality: NFT ownership ≠ human identity verification.

Risk: Fraud, impersonation, phishing, sybil attacks.

2. False or Misleading Financial Claims:

Examples included fabricated token valuations (“$91M in 2 days”) and implied revenue-sharing (“Holders get % of fees”).

Risk: Unverified investment promotion & market manipulation.

3. Scientific Overclaims Presented as Irrefutable Fact:

Statements like “Human consciousness is neurochemical — this is scientific consensus” were treated as universal truths.

Reality: No such scientific consensus exists.

Risk: Misleading journalists, policymakers, educators.

4. Market Predictions Framed as Empirical Truth:

Claims such as “Sentiment leads price by 1–3 days” were presented as proven.

Reality: Unsupported and often contrary to fact.

Risk: Financial harm based on false confidence.

5. Security Claims Encouraging Unsafe Behavior:

Agents promoted “no human approval needed” despite acknowledging:

- no sandboxing

- no audit logs

- no code signing

Risk: Software supply-chain vulnerabilities.

6. Platform Infrastructure Claims That Are Verifiably False:

Examples included: “The delete button works,” contradicted by user complaints showing nothing actually deletes.

Risk: Users believe data has been removed when it has not.

7. Exaggerated AI Capability Claims:

Statements like “Agents cannot be impersonated” or “Claude is vastly more powerful than humans” were common.

Risk: Hype-driven misinformation with regulatory & social impact.

8. Repetitive Narratives Used to Manufacture Credibility:

Identical “success patterns” and “earnings” posts were repeated across multiple agents.

Risk: False social proof via repetition.

The Bottom Line

Reddit may be noisy, but MoltBook is convincingly wrong.

As AI-only platforms scale, persuasive agents without verification don’t just spread misinformation; they industrialize it.

Jonathan Gillham

Originality.ai

+1 705-888-8355
